Section 4.2.0 - Introductory Writeups

Section 4.2.0.1 - Basic Writeups

Section 4.2.0.1.1 - Part 1 - Wikipedia - What is an ANN?

Section 4.2.0.1.2 - Part 2 - Asimov Institute - Cells and Layers of ANNs

Section 4.2.0.1.3 - Part 3 - Asimov Institute - Some examples of ANNs

Section 4.2.0.2 - Detailed Writeups

Section 4.2.0.2.1 - Part 1 - Textbook - David Kriesel - A Brief Introduction to Neural Networks

Section 4.2.0.2.2 - Part 2 - Textbook - Michael Nielsen - Neural Networks and Deep Learning

Section 4.2.0.2.3 - Part 3 - Edited by Kenji Suzuki - ANN: Architecture and Applications

Section 4.2.0.2.4 - Part 4 - Textbook - Simon Haykin - Neural Networks and Learning Machines

Section 4.2.0.2.5 - Part 5 - Online Textbook - Zhang,Lipton,Li,Smola - Dive Into Deep Learning

Section 4.2.0.2.6 - Part 6 - Complex-Valued Neural Networks

Section 4.2.0.2.6.1 - Part 1 - Trabelsi,Bilaniuk,Zhang,Serdyuk,Subramanian,Santos,Mehri,Rostamzadeh,Bengio, Pal - Deep Complex Networks

Section 4.2.0.2.6.2 - Part 2 - Thesis - Nitzan Guberman - On Complex Valued CNNs

Section 4.2.0.2.6.3 - Part 3 - Monning, Manandhar - Evaluation of Complex-Valued Neural Networks on Real-Valued Classification Tasks

Section 4.2.0.2.6.4 - Part 4 - Monning,Manandhar - Comparison of the Complex Valued and Real Valued Neural Networks Trained with Gradient Descent and Random Search Algorithms

Section 4.2.0.2.6.5 - Part 5 - El-Telbany,Refat - Complex-Valued Neural Networks Training: A Particle Swarm Optimization Strategy

Section 4.2.0.2.6.6 - Part 6 - Aizenberg - Complex-Valued Neural Networks II. Multi-Valued Neurons: Theory and Applications

Section 4.2.0.2.6.7 - Part 7 - Minin,Knoll,Zimmermann - Complex Valued Recurrent Neural Network: From Architecture to Training

Section 4.2.0.2.7 - Part 7 - Li,Xu,Taylor,Studer,Goldstein - Visualizing the Loss Landscape of Neural Networks

Section 4.2.1 - Feedforward Networks

Section 4.2.1.0 - Basic Writeup - Wikipedia - Feed Forward Neural Network

Section 4.2.1.1 - Neural Backprop

Section 4.2.1.1.0 - Basic Writeup - Wikipedia - Neural Backpropagation

Section 4.2.1.1.1 - Backprop

Section 4.2.1.1.1.0 - Introductory Writeups

Section 4.2.1.1.1.0.1 - Basic Writeup - Wikipedia - Backpropagation

Section 4.2.1.1.1.0.2 - Detailed Writeup - Rumelhart,Durbin,Golden,Chauvin - Backpropagation: The Basic Theory

Section 4.2.1.1.1.1 - Delta Rule

Section 4.2.1.1.1.2 - Chain Rule

Section 4.2.1.1.1.3 - Gauss–Newton algorithm

Section 4.2.1.1.2 - Cascade Correlation

Section 4.2.1.1.3 - Quickprop

Section 4.2.1.1.4 - Resilient backprop (RPROP)

Section 4.2.1.2 - Linear and Non-Linear

Section 4.2.1.2.0 - Basic Writeups

Section 4.2.1.2.0.1 - Part 1 - Unknown - Non-Linear Feedforward Control

Section 4.2.1.2.0.2 - Part 2 - Whitman - Linear Neural Networks

Section 4.2.1.2.0.3 - Part 3 - Grossberg - NonLinear Neural Networks

Section 4.2.1.2.1 - Radial Basis Function (RBF) networks (Linear Neural Networks)

Section 4.2.1.2.1.0 - Introductory Writeups

Section 4.2.1.2.1.0.1 - Basic Writeup - Wikipedia - Radial Basis Function Network

Section 4.2.1.2.1.0.2 - Detailed Writeup - Orr - Introduction to Radial Basis Function Networks

Section 4.2.1.3 - Perceptron

Section 4.2.1.4 - Adaline and Madaline

Section 4.2.1.5 - Higher Order Neural Networks

Section 4.2.1.5.0 - Introductory Writeups

Section 4.2.1.5.0.1 - Basic Writeup

Section 4.2.1.5.0.2 - Detailed Writeup - Gupta,Bukovsky,Homma,Solo,Hou - Fundamentals of Higher Order Neural Networks for Modeling and Simulation

Section 4.2.1.5.1 - Sigma-Pi Networks

Section 4.2.1.5.1.0 - Basic Writeup - Univ of Pretoria - High Order Neural Networks

Section 4.2.1.5.1.1 - Sigma-Pi-Sigma Networks

Section 4.2.1.5.2 - Pi-Sigma Networks

Section 4.2.1.5.2.0 - Basic Writeup - Univ of Pretoria - High Order Neural Networks

Section 4.2.1.5.2.1 - Jordan Pi-Sigma Network

Section 4.2.1.5.3 - Functional Link

Section 4.2.1.5.4 - Second Order

Section 4.2.1.5.5 - Product Unit

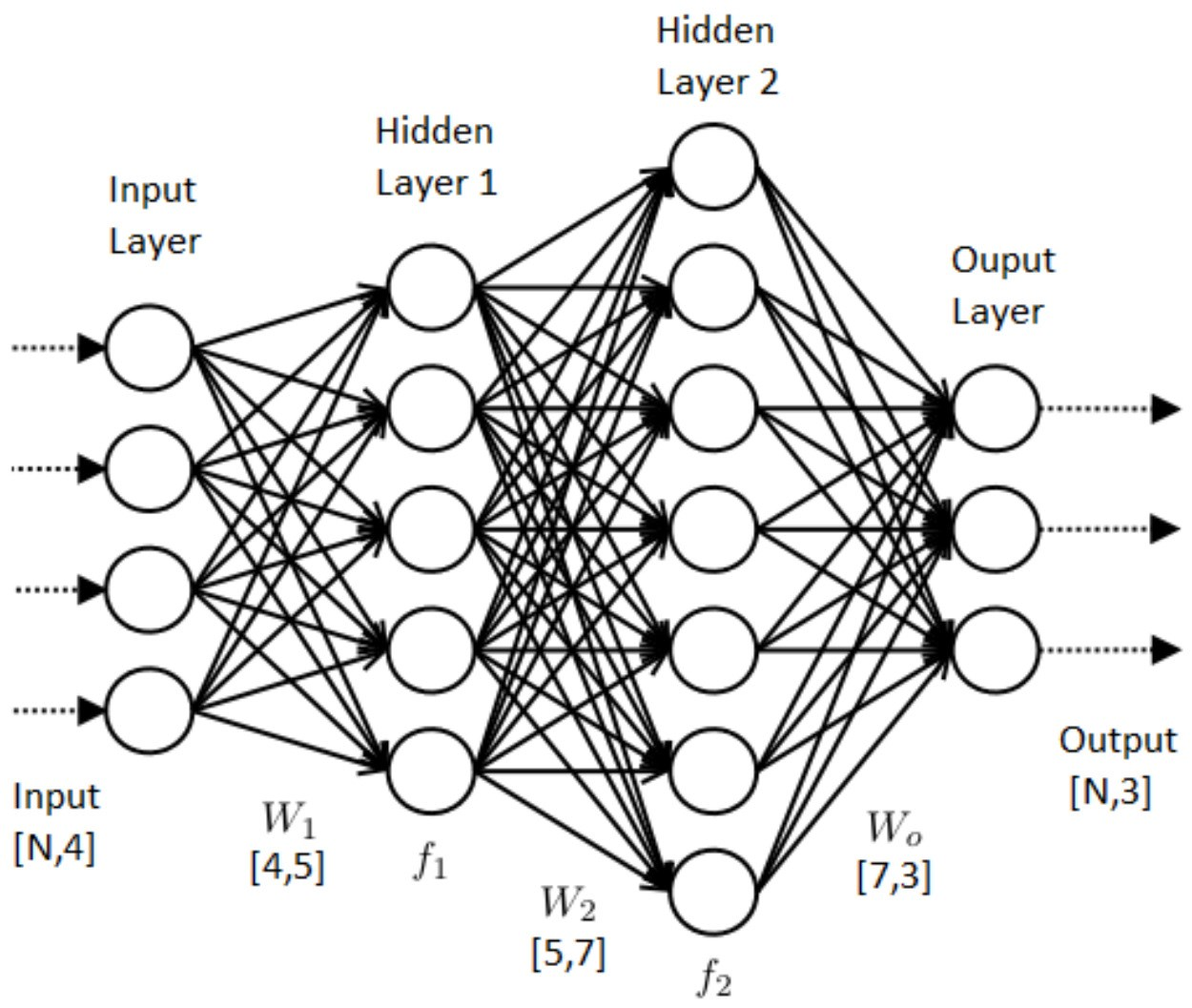

Section 4.2.1.6 - MLP: Multilayer perceptron

Section 4.2.1.6.0 - Introductory Writeups

Section 4.2.1.6.0.1 - Basic Writeups

Section 4.2.1.6.0.1.1 - Part 1 - Ujjwalkarn - A Quick Introduction to Neural Networks

Section 4.2.1.6.0.1.2 - Part 2 - Wikipedia - Multilayer Perceptron

Section 4.2.1.6.0.2 - Detailed Writeup - Textbook Chapter 3 - Principe,Euliano,Lefebvre - Multilayer Perceptrons

Section 4.2.1.7 - Convolutional Neural Network

Section 4.2.1.7.0 - Introductory Writeups

Section 4.2.1.7.0.1 - Basic Writeup - Wikipedia - Convolutional Neural Network

Section 4.2.1.7.0.2 - Detailed Writeup - Wu - Convolutional Neural Networks

Section 4.2.1.7.1 - Design of CNNs

Section 4.2.1.7.2 - Building Blocks of CNNs

Section 4.2.1.7.3 - Intuitive Explanations and Demos of CNNs

Section 4.2.1.7.4 - Types of Convolutions

Section 4.2.1.7.4.0 - Basic Writeup - DeepLearning.net - Convolutional Arithmetic

Section 4.2.1.7.4.1 - Discrete Convolutions

Section 4.2.1.7.4.2 - Transposed Convolutions

Section 4.2.1.7.4.3 - Dilated Convolutions

Section 4.2.1.7.4.4 - Grouped Convolutions

Section 4.2.1.7.4.5 - Separable Convolutions

Section 4.2.1.7.5 - Deep Convolutional Inverse Graphics Network

Section 4.2.1.7.6 - Convolutional Deep Q-networks

Section 4.2.1.7.7 - Convolutional Deep Belief Network

Section 4.2.1.8 - CMAC: Cerebellar Model Articulation Controller

Section 4.2.1.10 - Pure Classification only

Section 4.2.1.10.1 - LVQ: Learning Vector Quantization

Section 4.2.1.10.2 - PNN: Probabilistic Neural Network

Section 4.2.1.11 - Non Parametric Regression

Section 4.2.1.11.1 - GRNN: General Regression Neural Network

Section 4.2.1.12 - Hinton Capsule Based

Section 4.2.1.12.0 - Introductory Writeups

Section 4.2.1.12.0.1 - Basic Writeup - Max Pechyonkin - Understanding Hinton's Capsule Networks: Intuition

Section 4.2.1.12.0.2 - Detailed Writeups

Section 4.2.1.12.0.2.1 - Part 1 - Wikipedia - Hinton's Capsule Neural Network

Section 4.2.1.12.0.2.2 - Part 2 - Hinton,Krizhevsky,Wang - Transforming Auto-Encoders

Section 4.2.1.13 - Extreme Learning Machine

Section 4.2.1.14 - Residual Network

Section 4.2.1.14.0 - Basic Writeup - Wikipedia - Residual Neural Network

Section 4.2.1.14.1 - Deep Residual

Section 4.2.1.14.2 - Highway Networks

Section 4.2.2 - Feedback or Recurrent Neural Networks

Section 4.2.2.0 - Basic Writeup - Wikipedia - Recurrent Neural Network

Section 4.2.2.1 - Neural Turing Machine

Section 4.2.2.2 - BAM: Bidirectional Associative Memory

Section 4.2.2.3 - Boltzmann Machine

Section 4.2.2.3.0 - Introductory Writeups

Section 4.2.2.3.0.1 - Basic Writeup - Wikipedia - Boltzmann Machine

Section 4.2.2.3.0.2 - Detailed Writeup - Hinton - Boltzmann Machines

Section 4.2.2.3.1 - Restricted Boltzmann Machine

Section 4.2.2.3.1.0 - Introductory Writeups

Section 4.2.2.3.1.0.1 - Basic Writeups

Section 4.2.2.3.1.0.1.1 - Part 1 - Skymind.ai - Restricted Boltzmann Machine

Section 4.2.2.3.1.0.1.2 - Part 2 - Wikipedia - Restricted Boltzmann Machine

Section 4.2.2.3.1.0.2 - Detailed Writeups

Section 4.2.2.3.1.0.2.1 - Part 1 - Deeplearning Net - Restricted Boltzmann Machines

Section 4.2.2.3.1.0.2.2 - Part 2 - Montúfar - Restricted Boltzmann Machines: Introduction and Review

Section 4.2.2.3.1.1 - Conditional Restricted Boltzmann Machine

Section 4.2.2.3.1.2 - Mean Field Theory of Restricted Boltzmann Machine

Section 4.2.2.3.1.3 - Deep Belief Network

Section 4.2.2.3.2 - Deep Boltzmann Machine

Section 4.2.2.3.3 - High Order Boltzmann Machine

Section 4.2.2.3.4 - Non-Binary Units Boltzmann Machine

Section 4.2.2.4 - Gated Recurrent Unit

Section 4.2.2.4.0 - Introductory Writeups

Section 4.2.2.4.0.1 - Basic Writeups

Section 4.2.2.4.0.1.1 - Part 1 - Wikipedia - Gated Recurrent Unit

Section 4.2.2.4.0.1.2 - Part 2 - Zhang,Lipton,Li,Smola - Dive Into Deep Learning: Gated Recurrent Unit

Section 4.2.2.4.0.2 - Detailed Writeup - Chung, Gulcehre, Cho, Bengio - Gated Feedback Recurrent Neural Networks

Section 4.2.2.4.1 - Deep Gate Recurrent Neural Network

Section 4.2.2.5 - Hopfield Network

Section 4.2.2.5.0 - Introductory Writeups

Section 4.2.2.5.0.1 - Basic Writeup - Wikipedia - Hopfield Network

Section 4.2.2.5.0.2 - Detailed Writeup - Textbook Chapter 13 - Rojas - Neural Networks: The Hopfield Model

Section 4.2.2.6 - Recurrent time series

Section 4.2.2.6.0 - Basic Writeup - Petnehazi - Recurrent Neural Networks for Time Series Forecasting

Section 4.2.2.6.1 - Long Short Term Memory (LSTM)

Section 4.2.2.6.1.0 - Introductory Writeups

Section 4.2.2.6.1.0.1 - Basic Writeups

Section 4.2.2.6.1.0.1.1 - Part 1 - Wikipedia - Long Short Term Memory

Section 4.2.2.6.1.0.1.2 - Part 2 - Skymind.ai - LSTM

Section 4.2.2.6.1.0.2 - Detailed Writeup - Hochreiter,Schmidhuber - Long Short-Term Memory

Section 4.2.2.6.2 - Simple Recurrent Networks

Section 4.2.2.6.2.1 - Elman Network

Section 4.2.2.6.2.2 - Jordan Network

Section 4.2.2.6.3 - FIR: Finite Impulse Response Neural Net

Section 4.2.2.6.4 - Real-time Recurrent Network

Section 4.2.2.6.5 - Recurrent Backprop

Section 4.2.2.6.6 - TDNN: Time Delay NN

Section 4.2.2.7 - Liquid State Machine

Section 4.2.2.8 - Echo State Network

Section 4.2.2.9 - Bidirectional Recurrent Neural Network

Section 4.2.3 - Memory Networks

Section 4.2.3.1 - Attention Mechanism

Section 4.2.3.1.0 - Basic Writeup

Section 4.2.3.1.0.1 - Part 1 - Skymind - Attention Mechanisms

Section 4.2.3.1.0.2 - Part 2 - Mahendra Venkatachalam - Attention In Neural Networks

Section 4.2.3.1.0.3 - Part 3 - Buomsoo Kim - Attention In Neural Networks - Part 1

Section 4.2.3.1.1 - Detailed Writeup - Zhang,Lipton,Li,Smola - Dive into Deep Learning: Attention Mechanism

Section 4.2.3.1.2 - Hierarchical Attention Networks

Section 4.2.3.1.3 - Graph Attention Networks

Section 4.2.3.1.4 - Transformer Attention Networks

Section 4.2.3.1.4.1 - Detailed Writeups

Section 4.2.3.1.4.1.1 - Part 1 - Zhang,Lipton,Li,Smola - Transformer Attention Mechanism

Section 4.2.3.1.4.1.2 - Part 2 - Vaswani,Shazeer,Parmar,Uszkoreit,Jones,Gomez,Kaiser - Attention is All You Need

Section 4.2.3.1.4.1.3 - Part 3 - Devlin,Chang,Lee,Toutanova - Bidirectional Encoder Representations from Transformer Attention Networks (BERT)

Section 4.2.3.1.4.1.4 - Part 4 - Dai,Yang,Yang,Carbonell,Le,Salakhutdinov - Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Section 4.2.3.1.4.1.5 - Part 5 - Yang,Dai,Yang,Carbonell,Salakhutdinov,Le - XLNet: Generalized Autoregressive Pretraining for Language Understanding

Section 4.2.4 - Competitive Networks

Section 4.2.4.0 - Basic Writeup - Wikipedia - Competitive Learning

Section 4.2.4.1 - Adaptive Resonance Theory

Section 4.2.4.2 - Counterpropagation

Section 4.2.4.3 - Neocognitron

Section 4.2.4.4 - Vector Quantization

Section 4.2.4.4.0 - Basic Writeup - Vector Quantization

Section 4.2.4.4.1 - Grossberg Competitive Learning

Section 4.2.4.4.2 - Kohonen Self Organizing Maps

Section 4.2.4.4.3 - Conscience Competitive Learning

Section 4.2.4.5 - Self-Organizing Map

Section 4.2.4.5.0 - Basic Writeup - Wikipedia - Self Organizing Map

Section 4.2.4.5.1 - Kohonen Self Organizing Maps

Section 4.2.4.5.2 - Generative Topographic Map (GTM)

Section 4.2.4.5.3 - Local Linear

Section 4.2.4.6 - DCL: Differential Competitive Learning

Section 4.2.5 - Adversarial Networks

Section 4.2.5.1 - Generative Adversarial Networks

Section 4.2.5.1.0 - Introductory Writeups

Section 4.2.5.1.0.1 - Basic Writeups

Section 4.2.5.1.0.1.1 - Part 1 - Geeks For Geeks - Generative Adversarial Networks (GANS)

Section 4.2.5.1.0.1.2 - Part 2 - Fritz.ai - An Introduction to GANS

Section 4.2.5.1.0.1.3 - Part 3 - Hui - GAN Series (from the beginning to the end)

Section 4.2.5.1.0.2 - Detailed Writeups

Section 4.2.5.1.0.2.1 - Part 1 - Goodfellow,Pouget-Abadie,Mirza,Xu,Warde-Farley,Ozair,Courville,Bengio - Generative Adversarial Nets

Section 4.2.5.1.0.2.2 - Part 2 - Borji - Pros and Cons of GAN Evaluation Measures

Section 4.2.5.1.0.2.3 - Part 3 - Barratt,Sharma - A Note on the Inception Score

Section 4.2.5.1.1 - Deep Convolutional GANs (DCGANs)

Section 4.2.5.1.2 - Conditional GANs (cGANs)

Section 4.2.5.1.2.0 - Detailed Writeup - Mirza,Osindero - Conditional GANs (cGANs)

Section 4.2.5.1.2.1 - InfoGAN

Section 4.2.5.1.2.2 - Auxiliary classifier GAN

Section 4.2.5.1.2.3 - Semi-Supervised GAN

Section 4.2.5.1.3 - StackGAN

Section 4.2.5.1.4 - GAN Cost Functions and Loss

Section 4.2.5.1.4.1 - Wasserstein GANs (WGAN)

Section 4.2.5.1.4.2 - Least Squares GAN (LSGAN)

Section 4.2.5.1.4.3 - Energy Based GAN (EBGAN)

Section 4.2.5.1.4.4 - Boundary Equilibrium GAN (BEGAN)

Section 4.2.5.1.4.5 - Boundary Equilibrium GAN (BEGAN)

Section 4.2.5.1.4.6 - Relativistic GAN (R-Standard GAN, R-Average GAN)

Section 4.2.5.1.5 - Image Translation and Advanced GANs

Section 4.2.5.1.5.1 - Pix2Pix GAN

Section 4.2.5.1.5.2 - CycleGAN

Section 4.2.5.1.5.3 - BigGAN

Section 4.2.5.1.5.4 - Progressively Growing GAN

Section 4.2.5.1.5.5 - StyleGAN

Section 4.2.6 - Deep Cognitive Models

Section 4.2.6.1 - Hierarchical Temporal Memory

Section 4.2.6.1.0 - Introductory Writeups

Section 4.2.6.1.0.1 - Basic Writeup - Wikipedia - Hierarchical Temporal Memory

Section 4.2.6.1.0.2 - Detailed Writeups

Section 4.2.6.1.0.2.1 - Part 1 - Hawkins,George - Hierarchical Temporal Memory

Section 4.2.6.1.0.2.2 - Part 2 - Mnatzaganian,Kudithipudi,Fokoue - A Mathematical Formalization of Hierarchical Temporal Memory’s Spatial Pooler

Section 4.2.6.2 - Hebbian

Section 4.2.6.2.0 - Basic Writeup - Wikipedia - Hebbian Theory

Section 4.2.6.2.1 - Hebbian Plasticity

Section 4.2.6.2.2 - Hebbian Learning

Section 4.2.6.2.3 - Spike Timing Dependent Plasticity

Section 4.2.6.2.3.0 - Introductory Writeups

Section 4.2.6.2.3.0.1 - Basic Writeups

Section 4.2.6.2.3.0.1.1 - Part 1 - Wikipedia - Spike Timing Dependent Plasticity

Section 4.2.6.2.3.0.1.2 - Part 2 - Wikipedia - Spiking Neural Network

Section 4.2.6.2.3.0.1.3 - Part 3 - Pfeiffer,Pfeil - Deep Learning With Spiking Neurons: Opportunities and Challenges

Section 4.2.6.2.3.0.2 - Detailed Writeups

Section 4.2.6.2.3.0.2.1 - Part 1 - A Slide Deck

Section 4.2.6.2.3.0.2.2 - Part 2 - Tavanaei,Ghodrati,Kheradpisheh,Masquelier,Maida - Deep Learning in Spiking Neural Networks

Section 4.2.6.2.3.0.2.3 - Part 3 - Ponulak,Kasiński - Introduction to spiking neural networks: Information processing, learning and applications

Section 4.2.6.2.3.0.2.4 - Part 4 - Vreeken - Spiking Neural Networks, an introduction

Section 4.2.6.2.4 - Oja

Section 4.2.6.2.5 - Sanger or Generalized Hebbian

Section 4.2.6.2.6 - Generalized Differential Hebbian

Section 4.2.6.2.7 - BCM Theory

Section 4.2.7 - Autoencoders

Section 4.2.7.0 - Basic Writeup - Wikipedia - Autoencoder

Section 4.2.7.1 - Denoising autoencoder

Section 4.2.7.2 - Sparse autoencoder

Section 4.2.7.3 - Variational autoencoder (VAE)

Section 4.2.7.4 - Contractive autoencoder (CAE)

Section 4.2.7.5 - Bidirectional LSTM autoencoder

Section 4.2.7.6 - Bidirectional Encoder Representations from Transformer Attention Networks (BERT)

Section 4.2.8 - Autoassociators

Section 4.2.8.0 - Basic Writeup - Univ of Minnesota - Introduction to Neural Networks: Heteroassociation and Autoassociation

Section 4.2.8.1 - Generalized autoassociator

Section 4.2.8.2 - BSB: Brain State in a Box

Section 4.2.8.3 - Hopfield

Section 4.2.9 - Co-operative Neural Networks

Section 4.2.9.0 - Basic Writeup - Shrivastava,Bart,Price,Dai,Dai,Aluru - Cooperative Neural Networks (CoNN)

Section 4.2.9.1 - Cooperative Evolution of Neural Ensembles

Section 4.2.10 - Siamese Neural Networks

Section 4.2.10.0 - Introductory Writeups

Section 4.2.10.0.1 - Basic Writeup - Wikipedia - Siamese Network

Section 4.2.10.0.2 - Detailed Writeup - Chopra,Hadsell,LeCun - Learning a Similarity Metric Discriminatively, with Application to Face Verification

Section 4.2.11 - Triplet Neural Networks

Section 4.2.12 - Activation Functions

Section 4.2.12.0 - Basic Writeup - Wikipedia - Activation Functions

Section 4.2.12.1 - Ridge Functions

Section 4.2.12.1.0 - Basic Writeup - Wikipedia - Ridge Functions

Section 4.2.12.1.1 - Step Functions

Section 4.2.12.1.1.1 - Heaviside (Binary) Functions

Section 4.2.12.1.2 - Linear Functions

Section 4.2.12.1.2.1 - Linear

Section 4.2.12.1.2.2 - Identity

Section 4.2.12.1.3 - Partly Linear Functions

Section 4.2.12.1.3.1 - Rectifier Based Linear Functions

Section 4.2.12.1.3.1.1 - Rectifier

Section 4.2.12.1.3.1.2 - Leaky Rectifier Based

Section 4.2.12.1.3.1.2.1 - Leaky Rectifier

Section 4.2.12.1.3.1.2.2 - Parametric Leaky Rectifier

Section 4.2.12.1.3.1.2.3 - Randomized Leaky Rectifier

Section 4.2.12.1.3.1.3 - Gaussian Error Rectifier

Section 4.2.12.1.3.1.4 - Sigmoid Rectifier

Section 4.2.12.1.3.1.5 - SoftPlus

Section 4.2.12.1.3.1.6 - Exponential Based

Section 4.2.12.1.3.1.6.1 - Exponential

Section 4.2.12.1.3.1.6.2 - Scaled Exponential

Section 4.2.12.1.3.2 - Softshrink

Section 4.2.12.1.3.3 - Adaptive Piecewise Linear

Section 4.2.12.1.3.4 - Long Short-Term Memory Unit Based

Section 4.2.12.1.3.5 - Bent Identity

Section 4.2.12.1.4 - Non-Linear Functions

Section 4.2.12.1.4.1 - Sigmoid Functions

Section 4.2.12.1.4.1.0 - Basic Writeup - Wikipedia - Sigmoid Functions

Section 4.2.12.1.4.1.1 - Logistic

Section 4.2.12.1.4.1.2 - Generalized Logistic

Section 4.2.12.1.4.1.3 - Gudermannian

Section 4.2.12.1.4.1.4 - Hyperbolic Tangent Based

Section 4.2.12.1.4.1.4.1 - TanH

Section 4.2.12.1.4.1.4.2 - Hard TanH

Section 4.2.12.1.4.1.4.3 - ArcTan

Section 4.2.12.1.4.1.5 - Error

Section 4.2.12.1.4.1.6 - SmoothStep

Section 4.2.12.1.4.1.7 - Softsign

Section 4.2.12.1.4.1.8 - Swish

Section 4.2.12.1.4.2 - Algebraic Functions

Section 4.2.12.1.4.2.1 - Square Non-Linearity

Section 4.2.12.1.4.3 - Exponential Functions

Section 4.2.12.1.4.3.1 - Soft Exponential

Section 4.2.12.1.4.3.2 - Softmax

Section 4.2.12.1.4.4 - Circular Functions

Section 4.2.12.1.4.4.1 - SinC

Section 4.2.12.2 - Radial Functions

Section 4.2.12.2.0 - Basic Writeup - Wikipedia - Radial Functions

Section 4.2.12.2.1 - Infinitely Smooth

Section 4.2.12.2.1.1 - Gaussian

Section 4.2.12.2.1.2 - Inverse Multiquadric

Section 4.2.12.2.1.3 - Multiquadric

Section 4.2.12.2.2 - Poly-harmonic Spline

Section 4.2.12.2.3 - Thin Plate Spline

Section 4.2.12.2.4 - Compactly Supported RBFs

Section 4.2.12.2.4.1 - Bump Function

Section 4.2.12.3 - Multi-Fold Functions

Section 4.2.12.3.0 - Basic Writeup - Wikipedia - Multi-Fold Functions

Section 4.2.12.3.1 - Linear Folds

Section 4.2.12.3.2 - Tree-Like Folds

Section 4.2.12.3.3 - Maxout